Machine Creation Services (MCS) is a Citrix technology for provisioning virtual machines from OS images using hypervisors. It lets you distribute many machines across the enterprise, using the storage available to the hypervisor to provide the images.

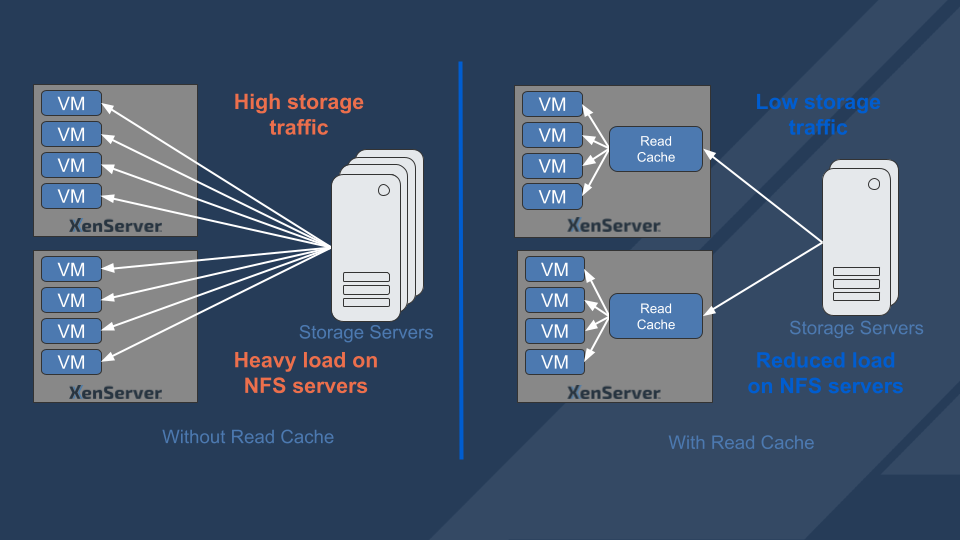

As discussed in the earlier article about IntelliCache, MCS depends on storage to deliver the images. This means the load on the remote storage can be high and must be taken into account when designing the deployment.

This can create a bottleneck during events such as boot storms, because every VM reads all of its data from the remote storage.

How can Read Cache help?

Running MCS workloads on XenServer with Read Cache can significantly reduce the load on storage and also make the VMs perform much faster.

How does read caching work?

XenServer provides a feature called Storage Read Cache that reduces the load on remote storage by using memory in the hypervisor hosts to cache data read from the master image. This can significantly reduce the load on the central storage and takes advantage of the much faster read times that memory provides.

This optimizes the storage load for machines created with MCS and thin provisioned onto storage, without reducing the agility of the machines. The VMs can still be started on any host that has access to the storage in use, and the cache is recreated in memory on whichever host is selected.

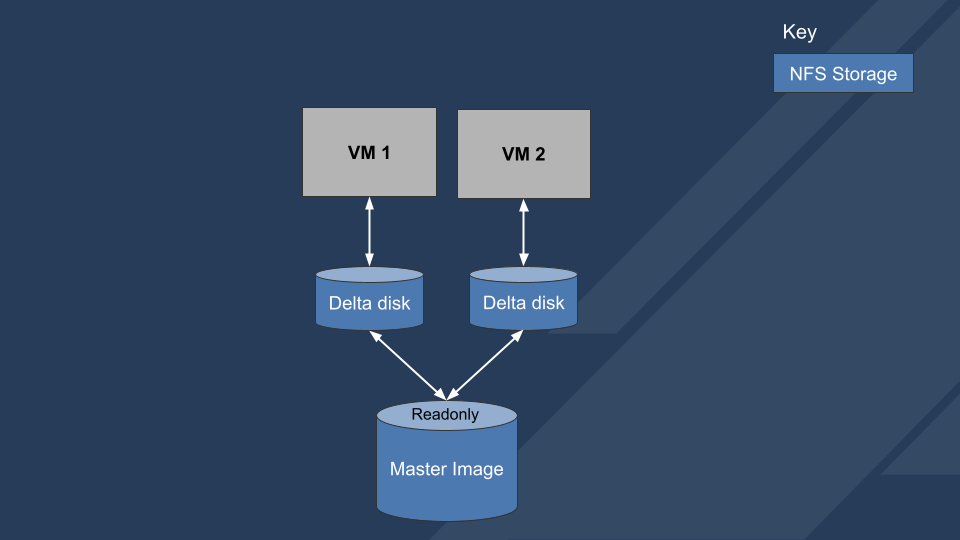
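To make the read path concrete, here is a toy Python model of a read-through cache, assuming each host keeps its own in-memory copy of the master-image blocks it has already read. `SharedStorage`, `Host` and `boot` are hypothetical names for illustration; this is not XenServer's implementation, and it ignores writes and memory limits. It shows two properties described above: repeated boots on one host stop reaching shared storage, and a VM started on a different host simply warms that host's cache.

```python
class SharedStorage:
    """Stand-in for the NFS storage holding the master image (toy model)."""

    def __init__(self, blocks):
        self.blocks = blocks  # block number -> data
        self.reads = 0        # number of reads that reached shared storage

    def read(self, block):
        self.reads += 1
        return self.blocks[block]


class Host:
    """A pool host that caches master-image reads in its own memory (hypothetical model)."""

    def __init__(self, name, storage):
        self.name = name
        self.storage = storage
        self.cache = {}       # block number -> data held in this host's memory

    def read(self, block):
        # Serve from memory when possible; otherwise fetch from shared storage and keep a copy.
        if block not in self.cache:
            self.cache[block] = self.storage.read(block)
        return self.cache[block]


def boot(host, blocks_needed):
    """A VM boot reads the same blocks whichever host it runs on; the VM sees no difference."""
    return [host.read(b) for b in blocks_needed]


storage = SharedStorage({i: bytes([i]) for i in range(100)})
host_a = Host("host-a", storage)
host_b = Host("host-b", storage)

for _ in range(5):            # five VMs boot on host A
    boot(host_a, range(100))
print(storage.reads)          # 100: only the first boot reached shared storage

boot(host_b, range(100))      # a VM started on host B warms B's own cache
print(storage.reads)          # 200
```

Because the cache sits behind the same `read` interface, nothing in `boot` changes when caching is added, which mirrors how the feature stays transparent to the VMs.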

With MCS you provision and distribute many identical machines. As long as you are not using the full clone method, they all share a common master image, and all of them read their disk content directly from the storage they are provisioned onto.

VMs provisioned with MCS using thin clones look like this:

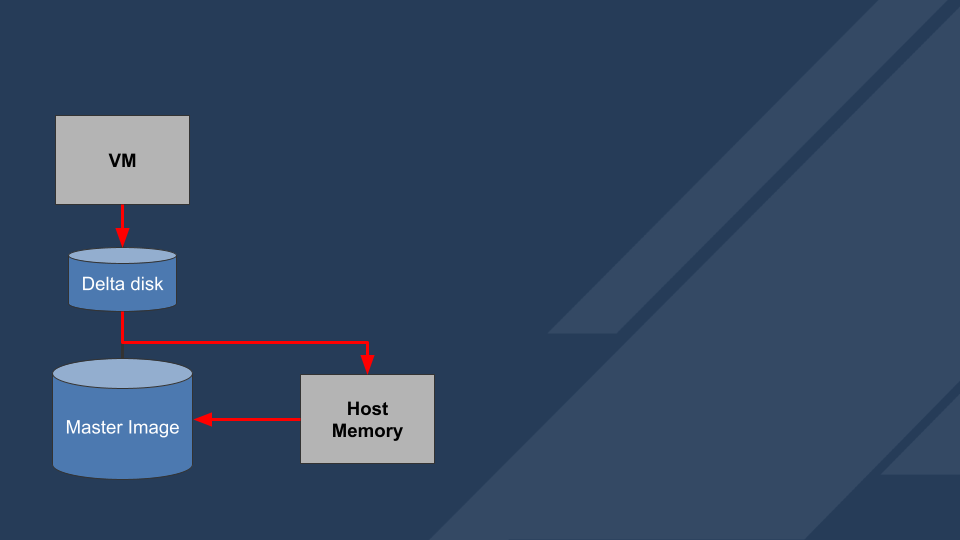
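The thin-clone layout can be sketched as a toy Python model, assuming each VM has a private differencing layer stacked on the shared, read-only master image. `ThinClone` and its methods are hypothetical names for illustration, not an MCS or XenServer API. Any block a VM has not changed is read from the master image, which is why many identical VMs end up reading the same data from shared storage. The `reset` method mimics the pooled model's return to a clean state.

```python
class ThinClone:
    """Toy model of a thin clone: a private differencing layer over a shared master image."""

    def __init__(self, master):
        self.master = master  # shared dict: block -> data, never modified by the clone
        self.diff = {}        # this VM's own writes

    def write(self, block, data):
        self.diff[block] = data  # writes never touch the master image

    def read(self, block):
        # Blocks this VM has written come from its own layer; everything else from the master.
        if block in self.diff:
            return self.diff[block]
        return self.master[block]

    def reset(self):
        self.diff.clear()     # pooled model: discard changes and return to a clean state


master = {0: "boot loader", 1: "OS files", 2: "default config"}
vm1, vm2 = ThinClone(master), ThinClone(master)
vm1.write(2, "vm1 config")
print(vm1.read(2), "|", vm2.read(2))  # vm1 config | default config
vm1.reset()
print(vm1.read(2))                     # default config
```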

With read cache enabled, the read paths look like this, diverting read load from the remote storage to memory on the local host.

This is all transparent: as far as the VM is concerned, it is still using disks on the NFS server. The VMs can therefore continue to be load balanced across the hosts in the XenServer pool, and the cache needs no maintenance or management by the administrator.

The memory cache is shared by all master images, so machines booting from different master images compete for it. The cache holds the most recent reads from the shared storage; if there is not enough memory to hold them all, newer reads flush older data from the cache, and that data must then be re-read from the shared storage. To get the expected benefit, make sure enough memory is available to hold the "working data set", that is, the frequently accessed data you need to cache.

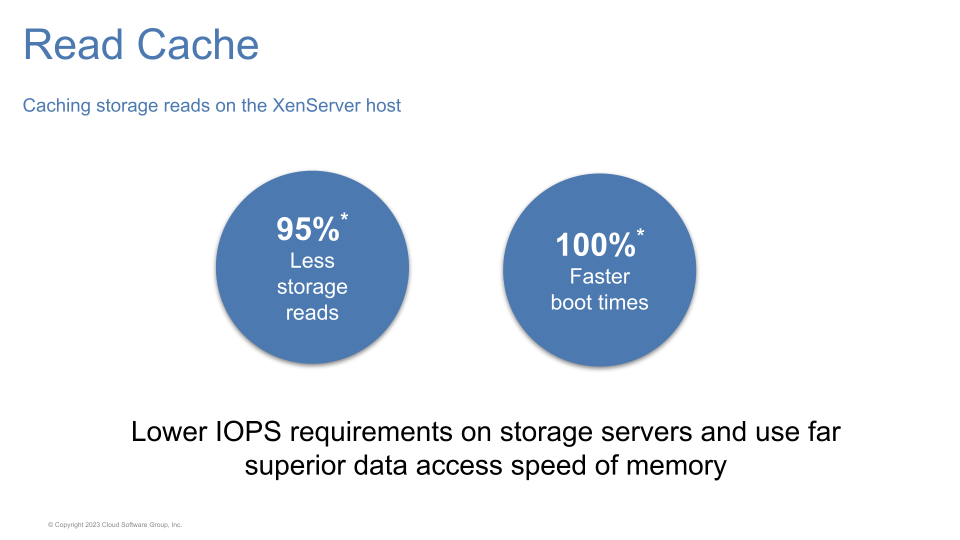
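The effect of a cache that is too small for the working set can be illustrated with a toy Python simulation. It assumes least-recently-used eviction as a stand-in for "newer reads flush older data"; XenServer's actual eviction policy may differ. `LRUReadCache`, `boot_storm`, the image names and the block counts are all hypothetical. Two master images share one host's cache, and every boot reads the same 100 blocks of its image.

```python
from collections import OrderedDict


class LRUReadCache:
    """Toy read cache shared by all master images on one host (LRU eviction assumed)."""

    def __init__(self, capacity_blocks):
        self.capacity = capacity_blocks
        self.entries = OrderedDict()  # (image, block) -> True, oldest first
        self.hits = 0
        self.misses = 0               # misses are reads that go to shared storage

    def read(self, image, block):
        key = (image, block)
        if key in self.entries:
            self.hits += 1
            self.entries.move_to_end(key)         # mark as most recently used
        else:
            self.misses += 1
            self.entries[key] = True
            if len(self.entries) > self.capacity:
                self.entries.popitem(last=False)  # evict the least recently used entry


def boot_storm(cache, images, blocks_per_boot, rounds):
    """Boot one VM per image, `rounds` times; each boot reads the same blocks of its image."""
    for _ in range(rounds):
        for image in images:
            for block in range(blocks_per_boot):
                cache.read(image, block)
    return cache.misses


# Two master images, 100 blocks read per boot, 5 rounds of boots.
fits = boot_storm(LRUReadCache(200), ["image-a", "image-b"], 100, 5)
too_small = boot_storm(LRUReadCache(150), ["image-a", "image-b"], 100, 5)
print(fits, too_small)  # 200 1000
```

With room for both images (200 blocks), only the first round of boots misses. With 150 blocks, the repeating access pattern evicts each block just before it is needed again, so every read goes back to shared storage. In this model the benefit does not shrink gradually as memory runs short, which is why sizing the cache for the whole working set matters.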

So how much of a difference does this make?

I created 5 machines using the pooled model, in which each machine resets to a clean state on every boot.

Booting these 5 machines from the NFS server at the same time took 5 minutes, with reads spiking to well over 100 MB/s and staying between 20 and 60 MB/s for much of that time.

Repeating the boot of the same machines with read cache turned on, and configured with enough memory to cache the disk contents, took a little over 1 minute and made almost no read requests to the NFS server.

You can see a video of this below:

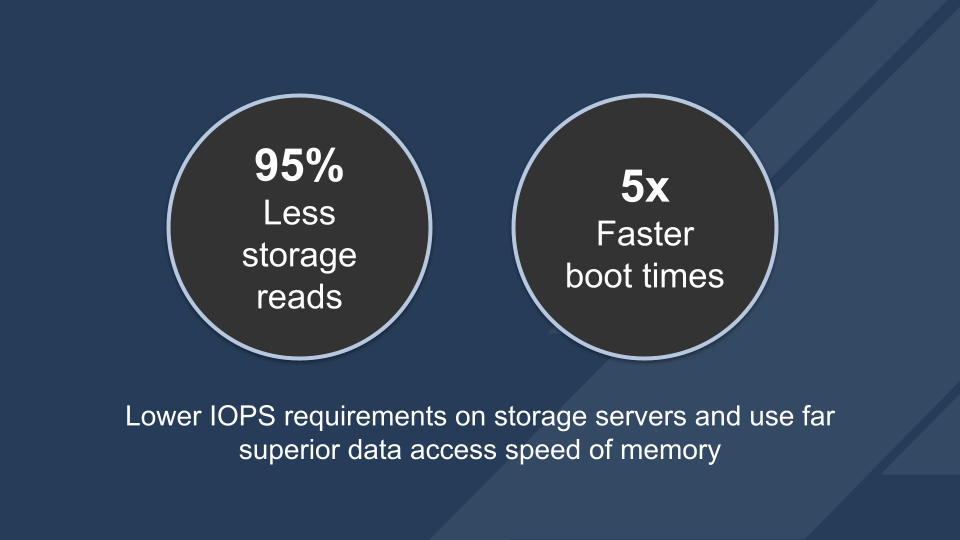

This reduced boot time by roughly 5x and cut reads on the shared NFS storage to practically zero.\*

\* Results depend on the amount of memory allocated to the read cache and the number of images being cached on the same host.

How hard is this to use?

Read caching is turned on by default, so it works out of the box. You will, however, need to increase the memory allocated to Dom0 so it is large enough to hold the data your images need, and do this on every host in the pool where you want to use the cache. The Dom0 memory resizing process is documented here: https://docs.xenserver.com/en-us/xenserver/8/memory-usage#change-the-amount-of-memory-allocated-to-the-control-domain
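Sizing Dom0 can be reduced to simple arithmetic, sketched in Python below. Because all VMs booting from the same master image share its cached data, the cache portion is the sum of the working sets of the distinct master images expected on the host, not one working set per VM. The function name, the baseline Dom0 figure, the optional headroom factor and the example numbers are all assumptions to be replaced with your own measurements; the linked documentation remains the authority on how to apply the new size.

```python
import math


def dom0_memory_mib(baseline_mib, working_sets_mib, headroom=0.0):
    """Hypothetical sizing helper: estimated Dom0 memory for one host, in MiB.

    baseline_mib: memory Dom0 needs without read caching (your own figure)
    working_sets_mib: estimated working set per distinct master image on this host
    headroom: optional safety margin applied to the cache portion (an assumption)
    """
    cache_mib = sum(working_sets_mib.values())  # one entry per image, not per VM
    return baseline_mib + math.ceil(cache_mib * (1 + headroom))


# Illustrative numbers only: two master images expected on this host.
images = {"image-a": 2048, "image-b": 1536}
print(dom0_memory_mib(4096, images))                 # 7680
print(dom0_memory_mib(4096, images, headroom=0.25))  # 8576
```

Repeat the calculation for each host in the pool where you want the cache to be effective, since each host caches independently in its own memory.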

You can try it yourself with the Trial Edition, available here: https://www.xenserver.com/downloads#download, or watch this video to see how effective the read cache is in action and how simple it is to configure.

Useful links

Read cache documentation:

Adjusting the memory configuration for Dom0: